
Last week, I was honored to be one of the judges at the Lean Impact Summit in San Francisco, where 15 nonprofits, startups, and social entrepreneurs gave Ignite-style presentations about how they applied lean startup principles. The event kicked off with an overview of lean impact by Dominique Aubry of Lean Startup Machine, followed by a provocative series of conversations between funders/investors and grantees/startups. The day ended with a talk by Christie George of New Media Ventures about the need for more risk capital (funding that makes it okay for a project to fail and learn).

The award winners were Black Girls Code and Taroworks. But as I listened to the pitches, I noticed the one thing all the speakers were experts at: applying validated learning, a key principle of the lean startup, as Eric Ries explained to me:

The point is that you need to go lean and fast. Here’s the method. Define the problem from your stakeholder’s point of view. Use human-centered design principles, not your arrogance of thinking you know what works for your audience without testing. Good testing begins with a hypothesis and collecting data to understand if you are right or wrong. Don’t let your vision become delusional. Science is a science – and it is hard. But we revere Einstein for his creativity. His creativity: I have a theory and I’m going to test it. Remember that when you use the scientific method, your experiment is only as good as your hypothesis. You have a vision, and then you create a hypothesis and collect data to prove it right or wrong.

Rebecca Masisak from TechSoup Global shared a presentation about how their organization has integrated lean startup principles, including their “Canvas,” a thinking tool that helps you lay out your hypotheses in different business areas and then test them. You can read more about how it works from Steve Blank in this HBR article and in the app Strategyzer.

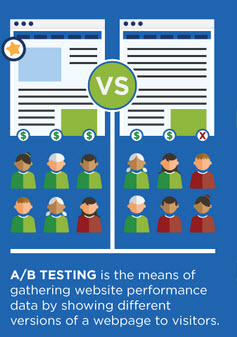

I was thinking about how nonprofits might start to apply some of this thinking without it becoming too overwhelming. Certainly, nonprofit marketers could use the marketing channels and customer relationship blocks of the canvas to set up a hypothesis and run experiments, then use what they learn to get better results. A/B testing is one technique, and you don’t have to be a math genius to apply it. Here are the simple rules for nonprofit marketers, as explained in this article in Social Media Today:

- Start With a Hypothesis: This could be as simple as “If we post more links on Facebook with the embedded link tool, we will increase our reach,” or any of the items that Mari Smith suggests you measure on Facebook. You could pick any channel, social or otherwise, to set up an A/B test hypothesis.

- Establish a Single Variable: Don’t try to test more than one variable at a time; just pick one thing to test. Your test will challenge this one thing, and everything else needs to remain the same while you test. In the example above, you would post the same link both with the embedded link tool and without it.

- Use Scientific Methods: These include a sufficiently large sample size, randomly assigned groups, and a true split test in which both versions run at the same time.

- Document Your Results: One excellent way to document your results is to write a quick report: a couple of paragraphs discussing your hypothesis, how you ran your test, and the final results. End your report with potential remedies or suggested follow-up testing, or even open it up for debate among others on staff to collect insight into your hypothesis. Here are some best practices for sharing results.

- Don’t Overtest: Keep it simple and don’t overdo it. Here are some guidelines for how long to run your test.
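To make the rules about scientific methods and test length a little more concrete, here is a minimal Python sketch of two standard calculations: a two-proportion z-test, which asks whether the difference in click rates between versions A and B is bigger than chance alone would explain, and a rough estimate of how many people each version needs. The function names and the click counts are hypothetical, and the sketch assumes each person was randomly assigned to exactly one version; a testing platform or a statistician may use different methods.

```python
import math
from statistics import NormalDist


def two_proportion_z_test(successes_a, n_a, successes_b, n_b):
    """Two-sided z-test for H0: versions A and B have the same success rate.

    Assumes the pooled rate is strictly between 0 and 1 (some clicks, some non-clicks).
    """
    rate_a = successes_a / n_a
    rate_b = successes_b / n_b
    # Under H0 both groups share one rate, so pool them to estimate it
    pooled = (successes_a + successes_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_b - rate_a) / std_err
    # Probability of a difference at least this large if H0 were true
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


def sample_size_per_group(base_rate, lift, alpha=0.05, power=0.8):
    """Approximate people needed in EACH group to detect an absolute lift (lift != 0)."""
    new_rate = base_rate + lift
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided significance level
    z_power = NormalDist().inv_cdf(power)          # chance of catching a real lift
    variance = base_rate * (1 - base_rate) + new_rate * (1 - new_rate)
    return math.ceil((z_alpha + z_power) ** 2 * variance / lift ** 2)


if __name__ == "__main__":
    # Hypothetical test: link clicks with vs. without the embedded link tool
    z, p = two_proportion_z_test(120, 2000, 160, 2000)
    print(f"z = {z:.2f}, p = {p:.3f}")
    print(sample_size_per_group(base_rate=0.06, lift=0.02))
```

With these made-up numbers (120 of 2,000 clicks for A versus 160 of 2,000 for B), z is about 2.48 and the p-value is roughly 0.013, below the conventional 0.05 cutoff. Detecting a two-point lift from a 6% baseline takes a bit over 2,500 people per version, which is one way to decide how long a test needs to run.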

Those are some great general principles, but how might you apply them to testing your digital marketing channels if you’re a nonprofit? You are most likely to be testing email, social media, mobile, your website, and landing pages (fundraising, etc.). Luckily, HubSpot just published this awesome guide, one of the clearest and easiest-to-understand how-tos on A/B testing for beginners. It offers specific examples of what to test and mini case studies. You can test:

- Color: It evokes feelings, which in turn set the emotional mood for acceptance or rejection by the viewer. The response is subjective, culturally influenced, unconscious, and involuntary. Colors used in pleasing combinations can attract attention and can also make other elements, whether text, objects, or images, easier or harder to comprehend. Testing different combinations allows you to maximize the results you want. Here is a case study of red versus green.

- Images: Images convey more than mere text, shapes, and colors. They help set the tone (serious, playful, clinical, provocative), instantly establishing an emotional connection with the viewer or setting up a barrier you may never overcome. That is why testing different images to see which ones yield the best results can make the difference between a campaign that gets results and a total dud. Don’t just guess. Test. The question isn’t whether photos do well on Facebook; it is which photo. For more about testing images on Facebook, see this useful article.

- Words: Language is tricky. Shades of meaning hide around every corner. Cultural nuances abound. Verbal fads and memes come and go in the blink of an eye. Being sensitive to your stakeholders’ mindset is crucial for successful communication, as is being clear about what you convey. That’s why tests of different words and phrases can be some of the most complex tests you run in pursuit of the best possible results. Bob Filbin from DoSomething says they test language all the time, especially for the call to action (“learn more” versus “sign up”). Read more here.

- $ Variables: In any online donation transaction, there are always key “moments of truth,” when the decision to donate is made. One thing to test is the dollar amount. This can be done simply by experimenting with minimum donation levels or by asking for a specific amount in the call to action (CTA). Here are some more ideas.

- Layout: Testing a text layout against a graphic layout will help you understand how to improve results. Here’s more about how nonprofits have A/B tested layout.
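When testing dollar amounts, note that a higher ask can lower the share of visitors who give while still raising more money overall, so judging by conversion rate alone can mislead. The minimal sketch below compares conversion rate, average gift, and revenue per visitor for two versions of a donation page; the `Variant` class and all of the numbers are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Variant:
    name: str
    visitors: int        # people who saw this version of the donation page
    gifts: list[float]   # individual donation amounts (hypothetical data)

    def conversion_rate(self) -> float:
        # Share of visitors who made any gift
        return len(self.gifts) / self.visitors

    def average_gift(self) -> float:
        # Mean gift among donors only
        return sum(self.gifts) / len(self.gifts) if self.gifts else 0.0

    def revenue_per_visitor(self) -> float:
        # Combines both effects: how many give and how much they give
        return sum(self.gifts) / self.visitors


if __name__ == "__main__":
    ask_25 = Variant("Suggested $25", visitors=1000, gifts=[25.0] * 50)
    ask_50 = Variant("Suggested $50", visitors=1000, gifts=[50.0] * 40)
    for v in (ask_25, ask_50):
        print(f"{v.name}: {v.conversion_rate():.1%} give, "
              f"avg ${v.average_gift():.2f}, ${v.revenue_per_visitor():.2f}/visitor")
```

In this invented example, the $50 ask converts fewer visitors (4% versus 5%) but brings in $2.00 per visitor instead of $1.25. Whether a difference like that is real rather than noise still calls for an adequate sample size and a significance check.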

There are also platforms, such as Optimizely, that help you design and implement A/B experiments.
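Platforms like these take care of randomly assigning people to groups for you. To show the idea, here is a minimal sketch of one common approach: hashing each visitor’s ID together with the experiment name, so the same person always sees the same version while the audience splits roughly evenly. This is an illustration only, not Optimizely’s implementation or API; `assign_variant`, the donor IDs, and the experiment name are hypothetical.

```python
import hashlib
from collections import Counter


def assign_variant(user_id: str, experiment: str, variants=("A", "B")) -> str:
    # Combine experiment name and visitor ID so each experiment splits people independently
    key = f"{experiment}:{user_id}".encode("utf-8")
    # SHA-256 output is effectively uniform, so taking it modulo the number
    # of variants gives an approximately even split
    bucket = int(hashlib.sha256(key).hexdigest(), 16) % len(variants)
    return variants[bucket]


if __name__ == "__main__":
    # Hypothetical donor IDs; the same ID always lands in the same group
    counts = Counter(
        assign_variant(f"donor-{i}", "spring-appeal-email") for i in range(10_000)
    )
    print(counts)  # expect a roughly even split between "A" and "B"
```

Deterministic assignment matters because a supporter who opens your email twice, or returns to a landing page, should not bounce between versions and muddy the results.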

For nonprofits and other overachievers, there is one more really important variable to test: the amount of time invested in implementing a strategy. Can you get the same results if it takes you less time to create and execute? Here’s an article on that topic.

How are you using experiments, A/B testing, and validated learning to improve your nonprofit’s impact? Share an example of your experiment in the comments.