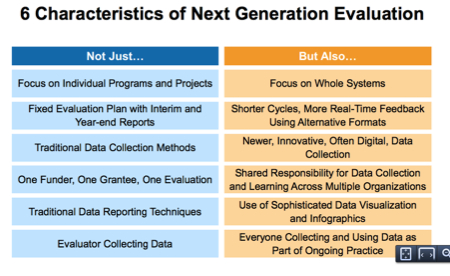

Last month, FSG and Stanford Social Innovation Review hosted “Next Generation Evaluation,” a meeting with nonprofit leaders, grantmakers, evaluators, and other interested organizations and individuals to explore the possibilities for learning and evaluation in the social sector. The conference was framed around the question: Given the convergence of networks and big data and the need for more innovation, what evaluation methods should be used alongside traditional methods to evaluate social change outcomes?

Hallie Preskill, Managing Director at FSG, gave the opening keynote. She talked about the trends setting the stage for new evaluation methods, but also cautioned that these methods would not replace traditional ones. She gave an overview of the three methods that would be discussed in more detail throughout the day: Developmental Evaluation, Shared Measurement, and Big Data. These approaches have the potential to significantly change how nonprofits and funders view evaluation. You can read more about the framing leading up to the convening in this Learning Brief that synthesizes FSG’s latest thinking on the future of social sector evaluation.

There were deep dive sessions for each method. You can view the videos and presentations on the conference materials page. I followed the developmental evaluation thread most closely. Here are my notes.

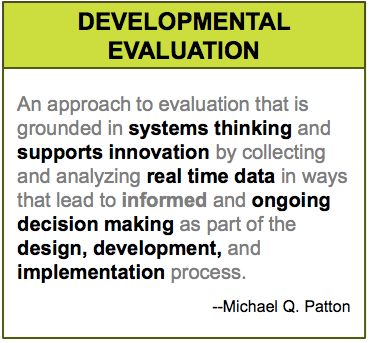

Kathy Brennan, Research and Evaluation Advisor at AARP, gave a primer on developmental evaluation: what it is, how it is applied, how it differs from other methods, and why it is useful in evaluating complex systems projects. You can watch the video or view her slides here. Here are my notes and takeaways.

Developmental Evaluation was invented by Michael Q. Patton, who is often referred to as the “Grandfather of Developmental Evaluation,” and the starting point for understanding the method is his book, “Developmental Evaluation.” I was lucky enough to meet and interview Patton in 2009. His thinking about developmental evaluation resonated with me:

- Evaluation needs to be relevant and meaningful. It isn’t a horrible alien thing that punishes people and makes judgments.

- Evaluation should not be separate from what we do. We need to integrate evaluation thinking into our everyday work so we can improve what we’re doing. Evaluation is not an add-on task.

- Need a culture of inquiry, sharing what works, what doesn’t. A willingness to engage about what to do to make your program better.

- Evaluation is not about getting to a best practice that can be spread around the world in a standardized way, or about answering the question, “Is everyone following the recipe?”

- Program development has to be ongoing, emergent. It isn’t a pharmacy metaphor of finding a pill to solve the problem.

- Real-Time Feedback/Evaluation is different from developmental evaluation, which is directed towards a purpose to do something. Police use real-time evaluation to allocate their resources. For example, if crime increases in a neighborhood, they know how to allocate patrols.

- Developmental evaluation speeds up the feedback loop.

Patton’s ideas and the methods of developmental evaluation are gaining more attention and practice because social change projects are shifting from a primary focus on program impact to systems impact, and are making use of networks as a key strategy. Systems are non-linear, complex, and dynamic. Traditional evaluation models, on the other hand:

- Focus on model testing

- Assume a clear, linear chain of cause and effect

- Measure success against predetermined outcomes

- Are based on a logic model

- Follow a fixed plan

- Use an external, neutral evaluator

- Refine a model or make a definitive judgment

The above slide was used to illustrate the iterative nature of a developmental evaluation of a complex systems social change initiative. It reminded me of “lean startup” methods: the process of discovery, iteration, and pivoting to the right strategy. Brennan put it into a nonprofit context. Developmental evaluation is used when:

The initiative is innovating and in development. The program is exploring, creating, and emerging. It can be characterized by:

- Implementors are experimenting with different approaches and activities

- Degree of uncertainty

- New questions, challenges, and opportunities for success are emerging

Brennan noted that when a program that is trying to change a system is in this stage, the logic model is not the navigational star.

Brennan shared some of the core techniques of developmental evaluation which included:

1. Before Action Review/ After Action Review

2. Meaning Making: cross-department interpretation of data in real time

3. Key Informant/Stakeholder Surveys and Interviews

These techniques are often applied to measuring social media and networks and making sense of the data.

In the afternoon breakout session, James Radner, Assistant Professor at the University of Toronto, presented “Frontiers of Innovation: A Case Study in Using Developmental Evaluation to Improve Outcomes for Vulnerable Children.” (Video and Slides). The program involved a multi-stakeholder Community of Purpose (researchers, policymakers, practitioners, and community members) with a goal to catalyze major breakthroughs in strategies that support healthy development, beginning in the early years, by applying knowledge in science, policy, and practice.

The evaluation methods included an annual cycle of stakeholder feedback, videos, and documents; network mapping; meaning-making sessions; annual evaluation reports; and annual “Green Papers.” The Green Papers were a reflection by program stakeholders on how to pivot. Throughout the evaluation, the evaluator’s and manager’s roles evolved, and they iterated on the evaluation design. In this way, the evaluator is more of a critical friend and part of the feedback loop than a judge.

One of the findings that I found most interesting was that, while the evaluators were looking at the overall program design, reflection and reflective practice were “fractal” and became a part of the culture for all stakeholders. Learning and pivoting were embedded in the strategy.

I am left wondering if it is possible for a nonprofit to integrate some of these techniques and methods for learning and pivoting into its everyday work. How does your nonprofit embrace reflective practice and learning?